I had the idea a long time ago to use computer vision to cheat at games. It sounded both hilariously impractical and strangely promising. The cheat would use only the information a human player has available, but it would be powered by a neural network trained to identify enemy players faster than a human could. Done right, it might even go undetected by client-side anti-cheat.

Most cheats work by scanning the game’s memory for information like player locations. But game programmers have powerful tools at their disposal to detect this kind of snooping, and developing cheats and anti-cheats has long been a cat-and-mouse game. By using only the information available to the player, an AI cheat could bypass a whole class of anti-cheat measures: client-side anti-cheats, which attempt to detect tampering with the game’s memory. The ideal AI cheat might even run on a different machine entirely, capturing video from the game PC’s HDMI output and sending its actions through a simulated mouse plugged into the game PC.

Whether or not you’re a fan of cheating in video games, the technical potential here is alluring. So for the final project of our machine learning class in spring 2022, my friend and I decided to give it a shot. Our cheat was designed for Counter-Strike: Global Offensive (CS:GO), a popular online shooter that pits terrorists and counter-terrorists against each other in various scenarios. We thought the in-game weapons’ high accuracy would help our cheat’s performance. Oh, and we named the cheat Eagle EYE X, with the AI model called AiMNET: just about the edgiest names we could come up with.

Initial experiments

Our first proof of concept wasn’t much of a cheat at all, just a Colab notebook with a few different pre-trained models and object detection algorithms. We tested them on CS:GO screenshots to find which gave the fastest and most accurate results. The initial results weren’t promising: detection was slow (often half a second or more per image) and accuracy was poor.

We experimented with the YOLO family of object detection models and found them to be the most accurate, but their performance was still far from what we needed.

The next step was to use the Win32 API to take continuous screenshots of the game window and run the inference in a loop. I made a quick overlay window with Qt, but the results were disappointing.

The latency was far too high, yielding a pitiful ~4 FPS.
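For scale, 4 FPS means each capture-plus-inference iteration took about 250 ms. One simple way to measure numbers like this is to record a timestamp each time a frame finishes and average over the last few frames. The sketch that follows is a hypothetical `FpsCounter` helper, not code from our project; it assumes timestamps in seconds, for example taken from `std::chrono::steady_clock`.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical helper: keeps the timestamps (in seconds) of the most recently
// completed frames and reports the average frame rate over that window.
class FpsCounter {
public:
    explicit FpsCounter(std::size_t window) : window_(window) {
        assert(window >= 2 && "need at least two frames to measure an interval");
    }

    // Record the time at which one frame (capture + inference) finished.
    void frame_done(double t_seconds) {
        stamps_.push_back(t_seconds);
        if (stamps_.size() > window_) stamps_.pop_front();
    }

    // Frames per second over the window: number of intervals / elapsed time.
    double fps() const {
        if (stamps_.size() < 2) return 0.0;
        double elapsed = stamps_.back() - stamps_.front();
        if (elapsed <= 0.0) return 0.0;
        return static_cast<double>(stamps_.size() - 1) / elapsed;
    }

private:
    std::size_t window_;
    std::deque<double> stamps_;
};
```

A sliding window smooths out frame-to-frame jitter while still reacting quickly when an optimization lands.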

Optimization and actuation

Clearly the performance of our simple Python implementation wasn’t going to cut it. We needed to offload as much computation onto the GPU as we could. The usual answer for running neural-net inference on a GPU is Nvidia’s CUDA, which works out of the box with TensorFlow, PyTorch, and other toolkits. But CUDA only runs on Nvidia GPUs, and I had an AMD card. We did run some tests of the same implementation with CUDA on my friend’s GTX 1080 Ti, and it got better results, but we needed something more cross-platform.

Enter DirectML: Microsoft’s abstraction layer for machine learning on the GPU. It would let us use the same code on Nvidia, AMD, and even Intel graphics. I spent the next week porting the inference code to DirectML. Microsoft’s examples and documentation did most of the hard work, since I had never used DirectX 12 before.

This greatly improved performance, even beating CUDA, and it was more portable. That still left us with the problem of actuation: in other words, how to actually click on the enemy’s head. For our proof of concept, we simply sent Win32 mouse events to the game window to simulate the player’s mouse movement. As you’ll see below, the movement is far from natural, and any anti-cheat should detect it, but it worked well enough to test the AI. We limited the actuation area to the yellow reticle around the player’s crosshair to make the aimbot’s flicks less jarring and to give the player some control over what the bot shoots.
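Limiting actuation to the reticle boils down to a nearest-target search with a distance cutoff. Here’s a simplified sketch of that idea; the `Detection` and `MouseDelta` structs, the `aim_delta` function, and the confidence threshold are illustrative assumptions, not the actual Eagle EYE X code. It picks the confident detection closest to the crosshair inside the reticle and returns the relative move toward it.

```cpp
#include <cassert>
#include <cmath>
#include <optional>
#include <vector>

// A detected enemy in screen pixels (hypothetical structure).
struct Detection {
    float cx, cy;      // center of the bounding box
    float confidence;  // model score in [0, 1]
};

// Relative movement needed to put the crosshair on a target, in pixels.
struct MouseDelta {
    int dx, dy;
};

// Return the move toward the confident detection nearest the crosshair,
// but only if it lies inside the reticle circle of radius `reticle_px`.
std::optional<MouseDelta> aim_delta(const std::vector<Detection>& dets,
                                    float crosshair_x, float crosshair_y,
                                    float reticle_px, float min_confidence) {
    assert(reticle_px > 0.0f);
    const Detection* best = nullptr;
    float best_dist = reticle_px;  // anything farther than this is ignored
    for (const Detection& d : dets) {
        if (d.confidence < min_confidence) continue;
        float dist = std::hypot(d.cx - crosshair_x, d.cy - crosshair_y);
        if (dist <= best_dist) {
            best_dist = dist;
            best = &d;
        }
    }
    if (!best) return std::nullopt;  // nothing in the reticle: don't touch the mouse
    return MouseDelta{static_cast<int>(std::lround(best->cx - crosshair_x)),
                      static_cast<int>(std::lround(best->cy - crosshair_y))};
}
```

The result is in screen pixels; turning it into an actual mouse event would also require scaling by the game’s sensitivity setting, which the sketch leaves out.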

Better, but still not enough: the initial DirectML implementation reached about 11 FPS.

More optimization

There was some low-hanging fruit. I improved the texture upload code and simplified the protocol that the inference engine and GUI overlay used to communicate, which made a surprisingly large impact. After these improvements we were well on our way to something usable.

Getting there… ~15 FPS.

Parallelize everything!

With the easy optimizations out of the way, we took some more drastic measures. Profiling showed that the CPU was still the bottleneck, especially the code that interpreted the model’s output. Most of it could be trivially parallelized using one of my favorite pieces of software: OpenMP. After ticking a box in Visual Studio and adding a few `#pragma omp parallel for` lines, we saw a significant improvement. Vectorizing some of the remaining math operations gave us another boost.
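Interpreting a detector’s output parallelizes well because every candidate box can be scored independently. The sketch that follows assumes a YOLOv5-style layout of one row per candidate, `[cx, cy, w, h, objectness, class scores...]`, which may not match our model exactly; `decode_boxes` and `Box` are hypothetical names used for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One candidate box after decoding (hypothetical structure).
struct Box {
    float cx, cy, w, h;
    float score;
    int cls;
};

// Decode a raw output tensor laid out row by row as
// [cx, cy, w, h, objectness, class_0, ..., class_{C-1}] (assumed layout).
// Rows are independent, so the scoring pass is split across threads with
// OpenMP. Without /openmp (or -fopenmp) the pragma is ignored and the loop
// runs serially with the same result.
std::vector<Box> decode_boxes(const std::vector<float>& out, int num_rows,
                              int num_classes, float threshold) {
    assert(num_rows >= 0 && num_classes >= 1);
    const int stride = 5 + num_classes;
    assert(out.size() == static_cast<std::size_t>(num_rows) * stride);

    std::vector<float> best_score(num_rows);
    std::vector<int> best_cls(num_rows);

    // Each iteration writes only its own index, so no locking is needed.
    #pragma omp parallel for
    for (int i = 0; i < num_rows; ++i) {
        const float* row = out.data() + static_cast<std::size_t>(i) * stride;
        int cls = 0;
        float cls_score = row[5];
        for (int c = 1; c < num_classes; ++c) {
            if (row[5 + c] > cls_score) {
                cls_score = row[5 + c];
                cls = c;
            }
        }
        best_score[i] = row[4] * cls_score;  // objectness * class score
        best_cls[i] = cls;
    }

    // Compacting is cheap, so it stays serial to keep the output order stable.
    std::vector<Box> boxes;
    for (int i = 0; i < num_rows; ++i) {
        if (best_score[i] < threshold) continue;
        const float* row = out.data() + static_cast<std::size_t>(i) * stride;
        boxes.push_back({row[0], row[1], row[2], row[3], best_score[i], best_cls[i]});
    }
    return boxes;
}
```

In a full pipeline, non-maximum suppression would then merge overlapping boxes. The per-row scoring is the part that parallelizes trivially, which is why the pragma sits on that loop.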

And this is where we settled: a reasonable ~21 FPS. Not butter-smooth by any measure, but enough that in many cases the aimbot can shoot better than I can (or maybe that says more about me 😉).

Architecture

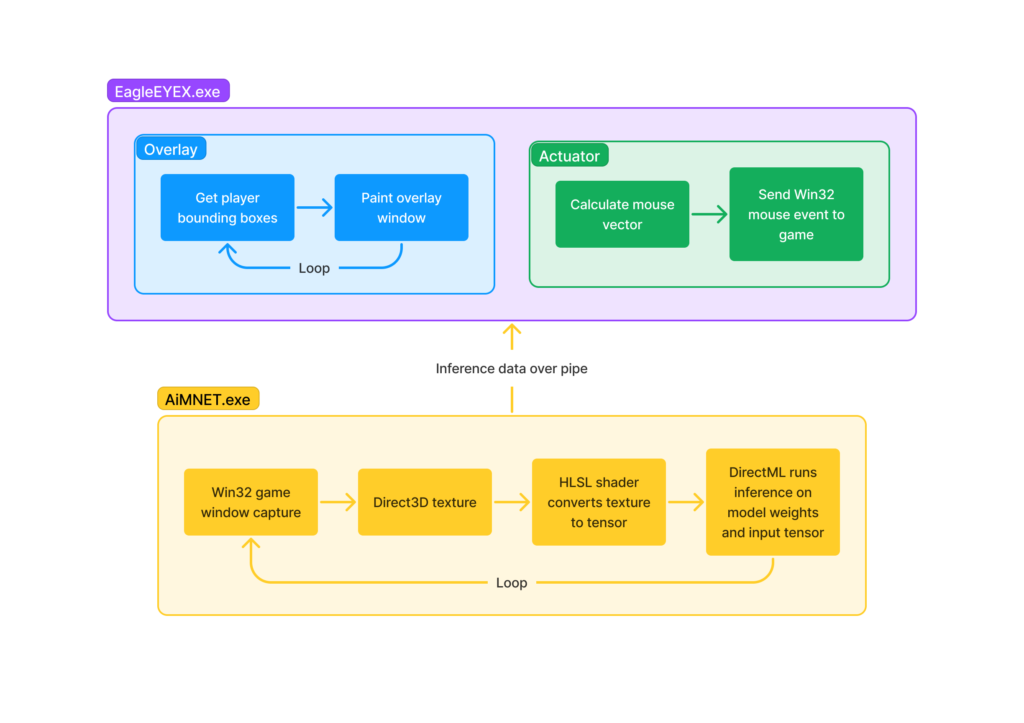

The cheat is split into two processes. The front-end EagleEYEX.exe handles drawing the overlay window and shooting the enemy, while the back-end AiMNET.exe handles the actual inference. All the data is passed as plain text through a pipe, which means you could easily swap out either component for a different implementation. Indeed, cheating isn’t the only application of the inference data: it could be used to label important moments (with lots of enemies on screen) of a gameplay recording, for instance.
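As an illustration (not our exact format), a plain-text protocol between the two processes could send one line per frame: a detection count followed by each box and its confidence. The field order and the `encode_frame`/`decode_frame` helpers are assumptions; the resulting strings can be written to any pipe or read from standard input.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <sstream>
#include <string>
#include <vector>

// A detection as it might travel between the two processes (hypothetical).
struct PipeDetection {
    int x, y, w, h;    // bounding box in screen pixels
    float confidence;
};

// Hypothetical line format, one frame per line:
//   <count> <x> <y> <w> <h> <conf> [<x> <y> <w> <h> <conf> ...]\n
std::string encode_frame(const std::vector<PipeDetection>& dets) {
    std::ostringstream os;
    os << dets.size();
    for (const auto& d : dets)
        os << ' ' << d.x << ' ' << d.y << ' ' << d.w << ' ' << d.h << ' ' << d.confidence;
    os << '\n';
    return os.str();
}

// Parse one line back into detections; returns nothing on malformed input.
std::optional<std::vector<PipeDetection>> decode_frame(const std::string& line) {
    std::istringstream is(line);
    std::size_t count = 0;
    if (!(is >> count)) return std::nullopt;
    std::vector<PipeDetection> dets;
    for (std::size_t i = 0; i < count; ++i) {
        PipeDetection d{};
        if (!(is >> d.x >> d.y >> d.w >> d.h >> d.confidence)) return std::nullopt;
        dets.push_back(d);
    }
    return dets;
}
```

A text format like this is easy to inspect by hand and easy to produce from another language, which is what makes either side swappable.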

Next steps

Since we focused on the enemy-detection part of an AI cheat, we didn’t even begin to explore all the clever ways you could bypass local anti-cheat. Some ideas that crossed my mind:

- Use an Nvidia Jetson (or a similar single-board computer) to run the inference code, taking input from an HDMI capture of the game.

- Use an Arduino (or similar) to emulate a mouse and mix in fake mouse inputs from the cheat with real ones.

- Experiment with using machine learning to detect patterns in certain players’ mouse movements, and attempt to emulate them. Google’s reCAPTCHA does something similar, using mouse movements (among other signals) to detect whether a user is a bot.
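The mixing in the second idea could look something like this on the emulator’s side. It’s a hypothetical sketch: `MouseMixer` isn’t tied to any Arduino library, and it assumes the emulator sends boot-protocol-style mouse reports, whose X/Y deltas are signed 8-bit values, carrying any motion that doesn’t fit over into the next report.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical mixer: each outgoing report merges the real mouse's motion
// with the cheat's pending correction. Deltas outside [-127, 127] don't fit
// in a signed 8-bit report field, so the excess waits for the next report.
class MouseMixer {
public:
    // Queue a correction requested by the cheat (in mouse counts).
    void add_cheat_move(int dx, int dy) {
        pending_x_ += dx;
        pending_y_ += dy;
    }

    // Combine one real report with pending cheat motion and produce the
    // clamped deltas for the outgoing report.
    void mix(int real_dx, int real_dy, std::int8_t& out_dx, std::int8_t& out_dy) {
        out_dx = take(pending_x_, real_dx);
        out_dy = take(pending_y_, real_dy);
    }

private:
    static std::int8_t take(int& pending, int real) {
        int total = pending + real;
        int sent = std::clamp(total, -127, 127);
        pending = total - sent;  // remainder is sent with a later report
        return static_cast<std::int8_t>(sent);
    }

    int pending_x_ = 0;
    int pending_y_ = 0;
};
```

Spreading a large correction over several reports also happens to make a flick look a little less like a single-frame teleport.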

There are also some really simple ways to make the cheat more effective. Currently the fire rate has a pretty low upper bound. That’s because in CS:GO, weapons have recoil when fired quickly: after a few rapid shots, the bullets no longer land exactly where your crosshair is, but slightly above it. A slow fire rate avoids recoil, so the weapon shoots straight. A simple improvement would be to raise the fire-rate limit in the cheat and implement some form of automatic recoil compensation, a feature present in most aimbots.
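A minimal sketch of recoil compensation, assuming the drift caused by each consecutive shot is known ahead of time. The pattern values, the reset delay, and the `RecoilCompensator` class are placeholders, not measured CS:GO data or code from the cheat. After each shot it returns the opposite of the expected kick, and a long enough pause between shots starts a new burst.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Screen-space drift in mouse counts. y grows downward, so recoil that
// pushes the aim up has a negative dy.
struct Offset {
    int dx, dy;
};

// Hypothetical compensator: pattern[n] is how far the aim is assumed to
// drift after the n-th shot of a burst (placeholder values, not real data).
class RecoilCompensator {
public:
    RecoilCompensator(std::vector<Offset> pattern, double reset_after_s)
        : pattern_(std::move(pattern)), reset_after_s_(reset_after_s) {
        assert(!pattern_.empty());
    }

    // Call right after firing at time `t` (seconds); returns the counter-move.
    Offset on_shot(double t) {
        // A long enough pause lets recoil recover, so the burst starts over.
        if (!fired_ || t - last_shot_ > reset_after_s_) shot_ = 0;
        fired_ = true;
        last_shot_ = t;
        // Past the end of the table, keep reusing the last entry.
        const Offset& kick = pattern_[shot_ < pattern_.size() ? shot_ : pattern_.size() - 1];
        ++shot_;
        return {-kick.dx, -kick.dy};
    }

private:
    std::vector<Offset> pattern_;
    double reset_after_s_;
    std::size_t shot_ = 0;
    double last_shot_ = 0.0;
    bool fired_ = false;
};
```

With a counter-move applied after every shot, the fire-rate cap could be raised without the bullets climbing above the target, at least to the extent the assumed pattern matches the weapon’s real behavior.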